Virtual Production

Year: 2019

Timeframe: 6 months

Team: 3 people and 8 vendors

Goal: Establish a virtual production practice at WB

Background

In the Media & Entertainment industry, virtual production generally refers to the combined use of game engines and motion capture technologies to achieve some goal in a visual effects (VFX) pipeline. A production's specific goal will vary based on the team's needs, ranging anywhere from pitchvis to rendering final pixels to an LED display on set. If you're interested, I highly recommend reading Epic Games' Virtual Production Field Guide to learn more!

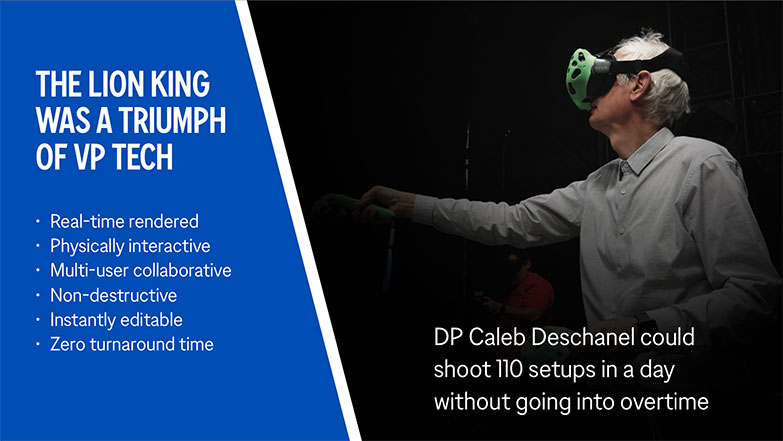

Virtual production was a hot news topic in 2019, especially surrounding Disney's The Lion King (2019) and The Mandalorian productions, so WB hired expert consultant Mike Seymour from the University of Sydney to write an executive brief on the topic. In parallel with Mike's paper, I was tasked with building a "virtual production sandbox" as an R&D facility where we could remotely collaborate on research with Mike's Sydney-based lab.

From July to December 2019 I worked with Mike and eight technology vendors on various virtual production workflow prototypes, including Performance Capture, Virtual Camera, Virtual Scouting, and Asset Pipelines. We presented our results in Q1 2020 to a wide range of audiences including New Line Cinema, AT&T Business Strategy, Rob Legato, and WB's Facilities, VFX, and Technology teams.

Slide from my final presentation highlighting virtual production on The Lion King

Performance Capture

Performance capture is an animation process in which the body and face of a live actor are captured and retargeted onto a digital character, either in a pre-recorded workflow or as a live stream. Often separate technologies are used to capture the face and body, and a production's Technical Director will ensure that all the animation data is configured correctly for the team's needs.

I led several performance capture workflow tests with a range of hardware including OptiTrack skeletal tracking, Xsens MVN Link, Faceware head-mounted camera systems, and Apple ARKit face tracking. I selected these technologies to allow us maximum flexibility within our project budget, equipping us to handle shoots either on-location or in our MoCap stage, and either live-streamed or pre-recorded.

Our associate engineer demonstrates a mobile performance capture rig

Virtual Camera

For productions using any kind of CG rendering pipeline, it is often desirable to have a cinematographer framing shots and moving the camera with a Virtual Camera System (VCS), as opposed to having an animator keyframe the camera motion by hand. I evaluated a few different VCS products including the OptiTrack Insight VCS and Glassbox Dragonfly, but ultimately decided to build my own VCS rig after receiving some great feedback during a workshop with Rob Legato.

My VCS rig reused some existing components from the Insight system and added several bespoke upgrades to make the rig wireless, including Teradek Bolt 500 video streaming and a custom Live Link integration to stream data from USB peripherals.

Testing OptiTrack active markers on an early version of my VCS rig

Virtual Scouting

Virtual scouting is perhaps the most intuitive virtual production workflow, in which Directors, Cinematographers, Gaffers, and others can plan production using a real-time rendered 3D visualization of a location. Often involving both virtual reality technologies and LiDAR or photogrammetry scans of real locations, virtual scouting is a state-of-the-art workflow that allows a production team to lock in key planning details such as lens choices, time of day, and the placement of cameras, lights, cast, and crew. With more detailed planning upfront, expensive days on set can be spent more efficiently, as key creative decisions have already been made.

I chose not to spend as much effort on virtual scouting tools, as WB's productions were more likely to hire dedicated teams for their needs than to invest in our small R&D toolset. For our sandbox, I integrated the Unreal Virtual Scouting tools presented at SIGGRAPH 2019, as Unreal's Multi-User Editor feature met our team's base requirements for online collaboration.

Caleb Deschanel scouts a virtual location for The Lion King

Asset Pipelines

While shiny technologies are fun to talk about, a production's asset pipeline is the foundation upon which everything else is built. Especially for live-streamed performances where there are no second chances, the production team needs absolute confidence that the models, rigs, shaders, animation blueprints, and system architecture will be rock-solid for the duration of the production.

For our demo assets, I hired Juan Carlos Leon as a consulting Technical Director, and together with Glassbox Technologies we created a reusable rig and animation blueprint for face and body performance which could be flexibly tailored to different actors. In other words, we developed a robust asset pipeline that met the needs of both live-streamed and pre-recorded productions.

Work-in-progress skin test for a generic character asset we created

Where are we today?

Unfortunately, due to the COVID-19 pandemic, I am currently working from home without access to most of our virtual production gear. In the meantime, I remain involved in virtual production as a sponsor of the USC Entertainment Technology Center and in the Academy Software Foundation's USD Working Group.